Big data, predictive analytics and machine learning have become ubiquitous buzzwords amid claims that they will revolutionise business models and society as a whole. The aim of using big data methods and data analytics is obvious: they help to measure the world and customers, create personality profiles and make real-time predictions based on increasing volumes of data [for more details, see Eicher/Romeike 2016 and Jackmuth 2018, p. 366 f.]. Data analytics is all about analysing and visualising information obtained from large data volumes using statistical methods. This article provides an overview of the use of data analytics and a selection of fraud detection and early risk detection tools developed from it.

On the trail of fraudsters

Back in 1881, while working with books of logarithms, the astronomer and mathematician Simon Newcomb noticed that the early pages were much more dog-eared and worn than the pages towards the back. He inferred that numbers with low leading digits occur more often and published this law on the distribution of leading digits in empirical data sets in the "American Journal of Mathematics". Numbers with the initial digit 1 occur about 6.6 times as frequently as numbers with the initial digit 9. Today, this law is known as Benford's Law or as the Newcomb-Benford Law (NBL).

This law of numbers is used in the real world today, for example to detect manipulations in accounting or tax returns, or data manipulations in science. For example, the creative accounting at the US companies Enron and Worldcom was uncovered using the NBL method. However, in practice Benford's Law only provides an initial indication – definitely not proof. More complex and better methods now exist that are more reliable at achieving the desired objectives.
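The first-digit check described above can be sketched in a few lines. Benford's Law gives the expected share of leading digit d as log10(1 + 1/d); the sketch compares observed leading-digit counts with these shares using a chi-square statistic. The function names and the sample data are assumptions made for illustration. In line with the caveat above, a high value is an initial indication, not proof.

```python
import math
from collections import Counter

def benford_expected():
    """Expected share of each leading digit 1..9 under Benford's Law."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}

def leading_digit(value):
    """First significant digit of a non-zero number."""
    # Scientific notation always starts with the first significant digit.
    return int(f"{abs(value):.15e}"[0])

def benford_chi_square(values):
    """Chi-square statistic of observed vs. expected leading-digit counts."""
    digits = [leading_digit(v) for v in values if v != 0]
    counts = Counter(digits)
    n = len(digits)
    return sum(
        (counts.get(d, 0) - n * p) ** 2 / (n * p)
        for d, p in benford_expected().items()
    )

# Illustrative data only: powers of two follow the law closely,
# while amounts that all start with 9 clearly do not.
natural_like = [2 ** k for k in range(1, 101)]
suspicious = [9000 + i for i in range(100)]

expected = benford_expected()
print(f"P(1) / P(9) = {expected[1] / expected[9]:.2f}")
print(f"chi-square, powers of two: {benford_chi_square(natural_like):.2f}")
print(f"chi-square, made-up amounts: {benford_chi_square(suspicious):.2f}")
```

With eight degrees of freedom, a chi-square value above roughly 15.51 corresponds to a deviation at the 5% significance level. Such a result is a reason to look more closely at the data, nothing more.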

Artificial intelligence methods, for example machine learning, using large data volumes (big data) are already being successfully deployed in risk management and fraud detection. This enables the forecasting quality of risk models to be significantly improved, particularly in identification of non-linear relationships between risk factors and risk events.

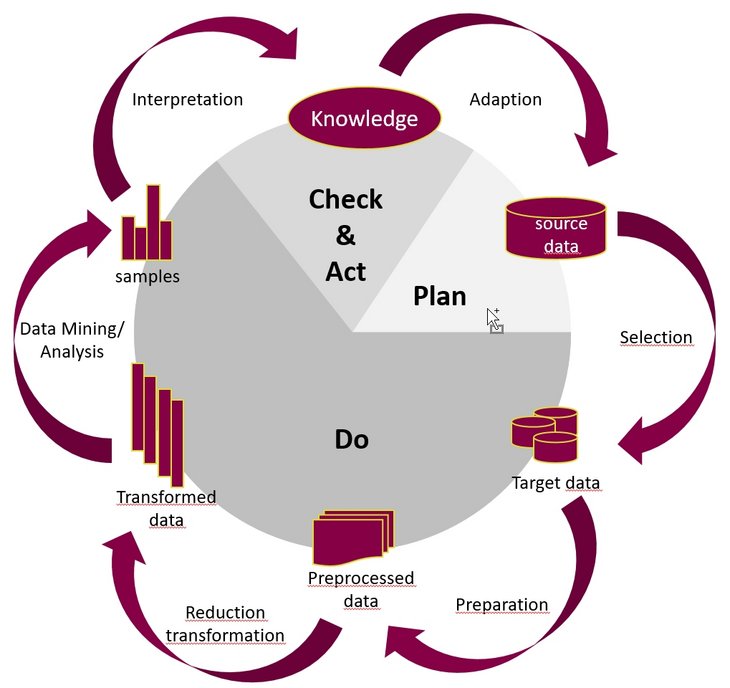

PDCA in data analytics: Integrated data analytics model

The hypothesis-free nature of determining a sequence of events can make it particularly difficult to come up with indicators and measurable objectives. In real life this generally means: "We want to identify as many cases as possible". And this is precisely where the analytical challenge lies. Does the population contain justiciable outliers and, if so, in what quantity?

We need to find methods of operationalising objectives and making them measurable. In practice, a modular combination of different methods, taking into account the type of application (ad-hoc, continuous/preventive and explorative analysis), in conjunction with the PDCA cycle and the KDD (knowledge discovery in databases) process has proved effective [for more details see Fayyad/Piatetsky-Shapiro/Smyth, 1996, p. 37 ff.]. This enables data analytics to be planned, performed and then evaluated and optimised using a defined and structured procedure [see Jackmuth 2018, p. 364].

The procedure is summarised in Tab. 01 [see Jackmuth 2018, p. 365].

| Phase | Description |
| --- | --- |
| Plan (planning) | In the first phase, expert knowledge is used to perform an analysis of the current situation. The aim is to define hypotheses or specify topics for hypothesis-free analyses. Data sources are located and preliminary tests of the suitability of the data quality for the analysis are performed. In addition, the objectives or expectations for the analysis are set. |
| Do (implementation) | The data is prepared for analysis and is then evaluated using analysis methods (data mining, machine learning etc.). |
| Check (review) | The results obtained from implementation are reviewed and validated. Based on process knowledge, relationships are explained and the methodology used is checked for potential improvements. |
| Act (improvement) | Experiences from the review are used for implementation. The cycle is repeated until satisfactory results are achieved. |

Tab. 01: PDCA in data analytics

The KDD process, which is made up of five steps and the associated inputs and outputs, can be optimised using the standard PDCA model as shown in Tab. 01.

Integrated data analysis cannot be limited to hypothesis-free analysis alone. For professional identification of fraud patterns, the use of process knowledge and the creation of hypotheses derived from it have proved effective in practice. "Sound criminal thinking", i.e. "learning from the best", in other words from the actual criminals, helps enormously in exposing crime.

This explicitly does not mean that hypothesis-free algorithms should not be used but that they should be supported by hypotheses and additional knowledge. An important characteristic of the KDD process is that it does not have to be linear and carried out in full at every iteration. Interruption and subsequent adaptation of preceding process steps are possible and useful.

For example, an analysis has identified that the data contains anomalous entries. These entries were each transferred to different bank accounts. Many of these bank accounts can be traced to what are known as direct banks, which often have no branch network and rely on third-party providers to verify new customers. Counterfeit documents potentially make some of the verification methods vulnerable to manipulation. For criminals, using direct banks for the target accounts makes good sense. Once this characteristic of the anomalous records has been identified, it needs to be put into a form that can be used for further analyses. A list of the sort codes for direct banks is added to the source data and is incorporated into the target data, i.e. the "Selection" step has been repeated and the subsequent steps are then performed again. In "Transformation/Reduction", the corresponding records for transfers to a direct bank have now been flagged and are available for the "Data Mining/Analysis" step (see Fig. 01) [see Jackmuth 2018, p. 366 f.].
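The repeated "Selection" and "Transformation/Reduction" steps from this example can be sketched as follows. This is a minimal illustration, not the authors' workflow: the sort codes, the field names and the function `flag_direct_bank_transfers` are hypothetical placeholders.

```python
# Hypothetical sort codes standing in for a real list of direct banks.
DIRECT_BANK_SORT_CODES = {"99900001", "99900002"}

def flag_direct_bank_transfers(transfers, direct_bank_sort_codes):
    """Return copies of the transfer records with a 'to_direct_bank' flag.

    Adding the sort-code list corresponds to repeating the "Selection" step;
    setting the flag corresponds to "Transformation/Reduction".
    """
    return [
        {**t, "to_direct_bank": t["target_sort_code"] in direct_bank_sort_codes}
        for t in transfers
    ]

# Made-up records for illustration only.
transfers = [
    {"id": 1, "amount": 4800.00, "target_sort_code": "99900001"},
    {"id": 2, "amount": 120.50, "target_sort_code": "10000000"},
    {"id": 3, "amount": 9900.00, "target_sort_code": "99900002"},
]

flagged = flag_direct_bank_transfers(transfers, DIRECT_BANK_SORT_CODES)
for record in flagged:
    print(record["id"], record["to_direct_bank"])
```

The flagged records are then available as an additional feature for the "Data Mining/Analysis" step, while the original records remain unchanged.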

Fig. 01: Integrated data analysis process


To take these findings into account, the KDD process can be integrated into the PDCA cycle. The aim must be to obtain a continuous data analysis process in fraud management, to enable problems to be uncovered as early as possible. The methods are available in various (in some cases open source) products (for example the analysis platform at www.knime.com originally developed at the University of Konstanz). This enables the analysis process to be continuously improved and the analysis results to be optimised.

Methods and tools

Rule-based filter methodology

The professional body for IT auditing, the Information Systems Audit and Control Association (ISACA), has been stipulating a Continuous Controls Monitoring (CCM) concept since 1978 (!). The procedure enables the performance of one or more processes, systems or data types to be monitored. In terms of forensic monitoring, it is based on a process-related method [see Jackmuth 2012, p. 632]. In an age when Microsoft® Excel could process just 16,384 rows, the only option was to set intelligent data filters. From the analysts' perspective, however, this only works if the filters actually cover the cases that occur.

To that extent, the flows of money leaving a company are the crucial criterion. To get to the criminals, you always follow the money – at least in electronic form. The extent to which data analytics can be classified as a preventive method in this case is down to the individual perspective. Software manufacturers are always keen to sell preventive tools, even if the rule-based approach only guarantees discovery after the first test case has occurred.
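A rule-based filter over outgoing payments can be sketched as a set of named predicates. The three rules, the approval limit and the field names below are assumptions chosen for illustration; they are not taken from the CCM concept or from any particular product.

```python
from datetime import date

APPROVAL_LIMIT = 10_000  # hypothetical threshold above which a second approval is needed

# Each rule is a named predicate over one payment record (all rules are illustrative).
RULES = {
    "just_below_approval_limit": lambda p: 0.95 * APPROVAL_LIMIT <= p["amount"] < APPROVAL_LIMIT,
    "booked_on_weekend": lambda p: p["booking_date"].weekday() >= 5,
    "round_amount": lambda p: p["amount"] >= 1_000 and p["amount"] % 1_000 == 0,
}

def apply_rules(payments, rules=RULES):
    """Return {payment id: [names of matching rules]} for payments hitting any rule."""
    hits = {}
    for payment in payments:
        matched = [name for name, rule in rules.items() if rule(payment)]
        if matched:
            hits[payment["id"]] = matched
    return hits

# Made-up outgoing payments.
payments = [
    {"id": 1, "amount": 9_800.00, "booking_date": date(2020, 3, 4)},
    {"id": 2, "amount": 5_000.00, "booking_date": date(2020, 3, 7)},
    {"id": 3, "amount": 1_234.56, "booking_date": date(2020, 3, 4)},
]

print(apply_rules(payments))
```

The sketch also shows the limitation named above: a payment is only flagged if someone has already written a rule that covers its pattern.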

Data mining

Data mining is a form of data acquisition or extraction. While the methods most frequently used by companies still tend to follow a rule-based approach, data mining is not intended to put known hypotheses to the test but to discover totally new things that are hidden in the depths of a database. Usama Fayyad, one of the fathers of the methodology, describes it as follows: Data mining is "the non-trivial process of identifying valid, novel, potentially useful and ultimately understandable patterns in data." [Fayyad/Piatetsky-Shapiro/Smyth 1996, p. 6].

The aim is to apply methods systematically to a large data volume (for example SOMs, self-organising maps, a type of neural network) in order to detect hidden rules, recognise patterns and structures, and establish variations and statistical anomalies.
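To make the idea of finding statistical anomalies without a predefined rule concrete, the sketch below swaps in a far simpler technique than a self-organising map: a robust z-score based on the median and the median absolute deviation (MAD). The constant 0.6745 and the threshold 3.5 follow a common convention for this score; the function names and the data are assumptions made for illustration.

```python
from statistics import median

def robust_z_scores(values):
    """Modified z-scores based on the median and the median absolute deviation."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # No spread around the median: the score is undefined, so report no deviation.
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]

def statistical_anomalies(values, threshold=3.5):
    """Indices of values whose modified z-score exceeds the threshold."""
    return [i for i, z in enumerate(robust_z_scores(values)) if abs(z) > threshold]

# Made-up booking amounts with one obvious outlier at the end.
amounts = [100, 102, 98, 101, 99, 103, 97, 5000]
print(statistical_anomalies(amounts))
```

Unlike a rule-based filter, this check needs no hypothesis about what a suspicious amount looks like; it only asks which values deviate strongly from the rest of the population.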

Text mining

Special investigations often encounter the problem that several hundred documents have to be inspected and categorised, and important information has to be located. The use of text mining can support this process. Text mining is an umbrella term for a number of statistical and linguistic methods and algorithms that are designed to make language understandable and analysable for a computer.

Data Analytics for Fraud Detection and Early Risk Detection

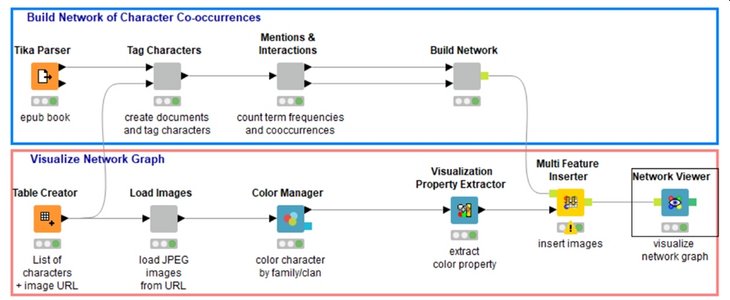

For special investigations, we regularly use analyses in the KNIME tool, for example to search several thousand pages in various documents for known persons.

The combinations and corresponding links between people can be identified automatically, thus revealing new relationships. The methodology used utilises the graph theory or social network analysis already described [see Romeike 2018, p. 110 ff.]. KNIME is based on a workflow principle (see Fig. 02). The data passes through different nodes and it is processed by the functionality of each node [see Jackmuth 2018, p. 402].

Fig. 02: Extract from the KNIME workflow
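The search for known persons and the links between them can be sketched outside KNIME in plain Python. This is not the KNIME workflow shown in Fig. 02: the names, the documents and both functions are hypothetical, and the naive substring matching stands in for the statistical and linguistic methods that a real text mining pipeline would use.

```python
from collections import Counter
from itertools import combinations

def persons_in_document(text, known_persons):
    """Known persons whose name appears in the text (case-insensitive substring match)."""
    lowered = text.lower()
    return {person for person in known_persons if person.lower() in lowered}

def co_occurrence_edges(documents, known_persons):
    """Count how often each pair of known persons appears in the same document.

    The result can be read as the weighted edge list of a social network graph.
    """
    edges = Counter()
    for text in documents.values():
        found = sorted(persons_in_document(text, known_persons))
        edges.update(combinations(found, 2))
    return edges

# Made-up names and documents for illustration only.
known_persons = ["Alice Adams", "Bob Brown", "Carol Clark"]
documents = {
    "memo.txt": "Alice Adams met Bob Brown.",
    "invoice.txt": "Approved by bob brown and Carol Clark.",
    "mail.txt": "Alice Adams wrote to Bob Brown again.",
}

for (a, b), weight in co_occurrence_edges(documents, known_persons).items():
    print(f"{a} <-> {b}: {weight} shared document(s)")
```

Pairs with a high weight are candidates for a closer look; the edge list can be handed to any graph tool for the social network analysis mentioned above.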


Summary and recommendations

Starting from the "Occupational Fraud and Abuse Classification System" tree published by the professional body ACFE, three main categories of economic crime can be identified in companies [see Kopetzky/Wells 2012, p. 52].

- Misuse of assets,

- Corruption and bribery,

- Financial data forgery.

However, if these factors are so clearly defined, risk and fraud management must be focused on identifying the causes as far as possible. Specifically, the key is to analyse the causes before the crime is actually committed. Providing budgets for prevention definitely makes better sense than covering the detection costs. In parallel, it is essential to strengthen the know-how in the company on effective use of the methods highlighted. Early identification of weak signals and causes using appropriate methods also saves costs [see Romeike 2019a].

The fraud triangle hypothesis developed in the last century is currently being extended based on methods from St. Gallen University. Various standards contain wordings such as "Economic crime is to be expected where there is motive, opportunity and also internal justification for committing the crime". Schuchter extends the hypothesis to create a fraud diamond – as well as opportunity, a successful act depends on the perpetrator's technical capabilities, for example circumventing the dual control principle or criminal access to IT systems.

It is vital to recognise these mechanisms and to know what makes criminals tick [for more details see Schuchter 2012]. At the same time, the rapid growth in available data resulting from the digitalisation of business models means that the opportunities for data analysis are also growing. We have outlined the benefits that companies can derive from these developments in the context of risk management and fraud detection. Deep learning, a subfield of machine learning, provides further support. Architectures in the form of neural networks are frequently used in this area, which is why deep learning models are often referred to as deep neural networks [for more details, see Schmidhuber 2015 and Romeike 2019a].

However, this requires companies to develop a new understanding of data analysis and to recognise and take advantage of the opportunities it presents. The coming years will show that data analysis know-how is becoming a crucial key competence (not exclusively) for risk managers and compliance experts. There will be fewer quants and risk managers using purely technical methods; instead quant developers and data managers/scientists will be the risk managers of the future. It will be up to them to develop robust data analytics models with a high forecasting quality.

Literature

- Fayyad, Usama/Piatetsky-Shapiro, Gregory/Smyth, Padhraic [1996]: From Data Mining to Knowledge Discovery in Databases, in: AI Magazine, American Association for Artificial Intelligence, California, USA, p. 37-54.

- Jackmuth, Hans-Willi [2012]: Datenanalytik im Fraud Management – Von der Ad-Hoc-Analyse zu prozessorientiertem Data-Mining [Data analytics in fraud management – From ad-hoc analysis to process-based data mining], in: de Lamboy, C./Jackmuth, H.-W./Zawilla, P. (ed.): Fraud Management – Der Mensch als Schlüsselfaktor gegen Wirtschaftskriminalität [Fraud management – People as the key factor in combating economic crime], Frankfurt am Main 2012, p. 627-662.

- Jackmuth, Hans-Willi/Parketta, Isabel [2013]: Methoden der Datenanalytik [Data analytics methods] in: de Lamboy, C./Jackmuth, H.-W./Zawilla, P. (ed.): Fraud Management in Kreditinstituten [Fraud management in banks], Frankfurt am Main 2013, p. 595-620.

- Jackmuth, Stefan [2018]: Integrierte Datenanalytik im Fraud Management [Integrated data analytics in fraud management], in: de Lamboy, C./Jackmuth, H.-W./Zawilla, P. (ed.): Fraud und Compliance Management – Trends, Entwicklungen, Perspektiven [Fraud and compliance management – Trends, developments, perspectives], Frankfurt am Main 2018, p. 345-407.

- Kopetzky, Matthias/Wells, Joseph T. [2012]: Wirtschaftskriminalität in Unternehmen. Vorbeugung & Aufdeckung [Economic crime in companies. Prevention & detection], 2nd edition, Vienna 2012.

- Newcomb, Simon [1881]: Note on the Frequency of the Use of different Digits in Natural Numbers. In: American Journal of Mathematics (Amer. J. Math.), Baltimore 4.1881, p. 39-40.

- Romeike, Frank [2017]: Predictive Analytics im Risikomanagement – Daten als Rohstoff für den Erkenntnisprozess [Predictive analytics in risk management – Data as the raw material for the detection process], in: CFO aktuell, March 2017.

- Romeike, Frank [2018]: Risikomanagement [Risk Management], Springer Verlag, Wiesbaden 2018.

- Romeike, Frank [2019]: Risk Analytics und Artificial Intelligence im Risikomanagement [Risk analytics and artificial intelligence in risk management], in: Rethinking Finance, June 2019, 03/2019, p. 45-52.

- Romeike, Frank [2019a]: Toolbox – Die Bow-Tie-Analyse [Toolbox – The Bow Tie Analysis], in: GRC aktuell, February 2019, 01/2019, p. 39-44.

- Romeike, Frank [2019b]: KI bei Datenschutz und Compliance [AI in data protection and compliance], in: Handbuch Künstliche Intelligenz [Artificial intelligence handbook], Bonn 2019, Internet: handbuch-ki.net

- Schmidhuber, Jürgen [2015]: Deep learning in neural networks: An overview, in: Neural Networks, 61, 2015, p. 85-117.

- Schuchter, Alexander [2012]: Perspektiven verurteilter Wirtschaftsstraftäter: Gründe ihrer Handlungen und Prävention in Unternehmen [Perspectives of convicted economic criminals: Reasons for their actions and prevention in companies], Frankfurt am Main 2012.

- Schuchter, Alexander [2018]: Wirtschaftskriminalität und Prävention. Wie Führungskräfte Täterwissen einsetzen können [Economic crime and prevention. How managers can use criminals' knowledge], Wiesbaden 2018.

Authors:

Hans-Willi Jackmuth | Managing Director | addResults GmbH | Rösrath / Cologne

Frank Romeike | Managing Director | RiskNET GmbH | Board Member | Association for Risk Management & Regulation | Munich and Frankfurt am Main

[Source: FIRM Yearbook 2020, p. 187-190]